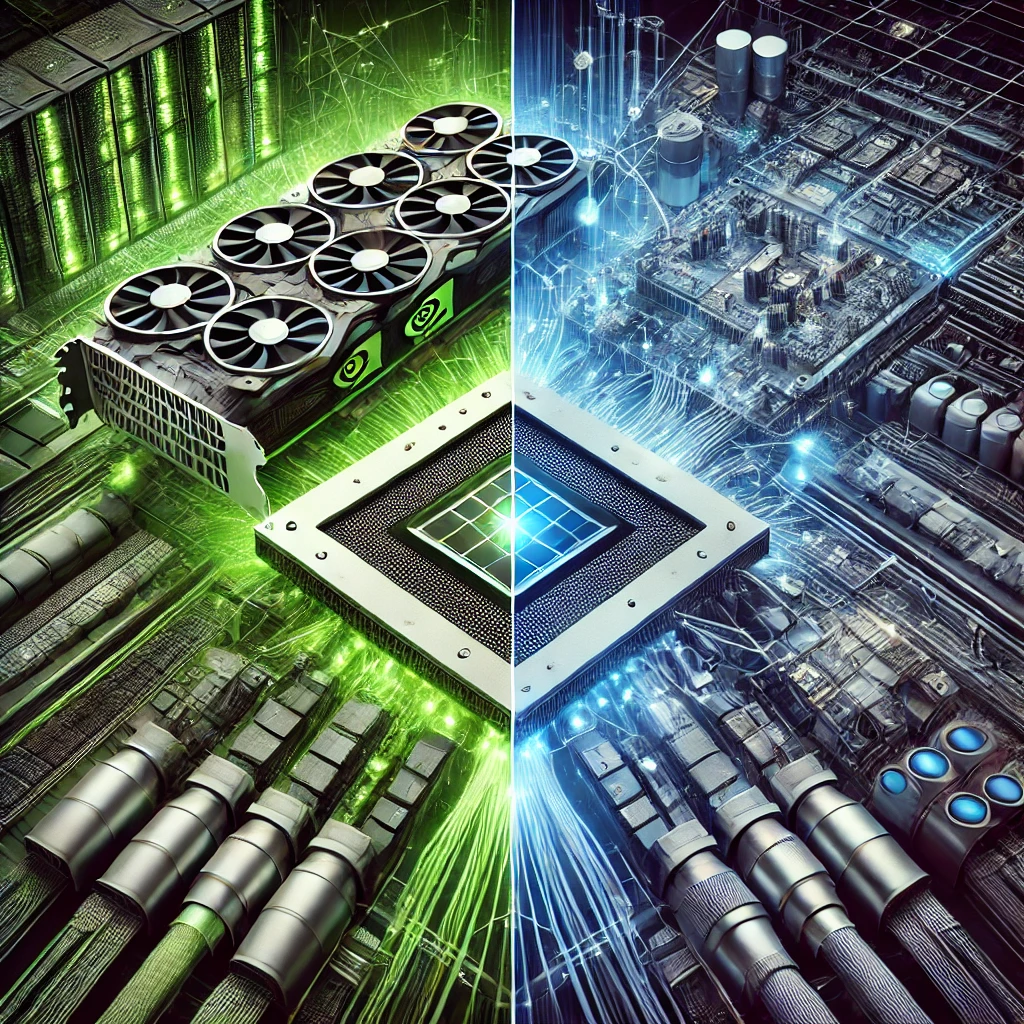

As artificial intelligence (AI) continues to reshape industries, organizations are increasingly investing in hardware that can support the massive computational demands of AI model training and inferencing. The decision between using general-purpose GPUs, such as NVIDIA’s, or purpose-built ASICs like those from Cerebras, presents a critical trade-off that depends on factors like performance, cost, and scalability. Here, I want to explore the benefits and challenges of these two technologies.

The Case for GPUs

GPUs were originally designed for rendering graphics, but they have long been the workhorses of AI computation and are now widely used for parallel computing tasks such as AI training and inferencing.

Benefits

- Versatility: GPUs are programmable, general-purpose parallel processors. This flexibility allows them to handle a wide variety of workloads, from deep learning to high-performance computing tasks, making them a good fit for diverse AI applications.

- Ecosystem Support: NVIDIA’s CUDA platform and its extensive libraries, like cuDNN and TensorRT, provide robust support for developers. The ecosystem enables quick deployment, optimized performance, and easier scaling.

- Scalability: GPUs can be deployed in a distributed manner across large-scale data centers. Reference architectures like NVIDIA’s DGX SuperPOD offer scalable cluster designs for enterprise AI.

- Community and Compatibility: The widespread adoption of GPUs means there is a large developer community, extensive documentation, and broad support across machine learning frameworks like TensorFlow and PyTorch.

Challenges

- Efficiency: General-purpose GPUs are not always the most efficient for specific AI tasks. They consume significant power and may underperform compared to purpose-built hardware in narrow applications.

- Cost: GPUs are versatile, but their cost in both hardware and energy consumption can be high for large-scale AI operations.

- Latency: For low-latency inferencing in edge scenarios, GPUs may fall short compared to specialized hardware.

The Case for ASICs

Application-Specific Integrated Circuits (ASICs) take a different approach. These chips are designed specifically for one class of workloads, in this case AI and particularly deep learning. A prominent example is the Wafer-Scale Engine that powers Cerebras’ CS-2 system.

Benefits

- Unmatched Efficiency: ASICs are optimized for specific tasks, which can provide higher computational efficiency and lower power consumption than general-purpose GPUs on those tasks. For example, Cerebras’ Wafer-Scale Engine is tailored for training large language models, where that specialization can translate into faster training and better performance per watt.

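- Performance for Large Models: ASIC-based systems like the Cerebras CS-2 are built to handle massive AI models with billions of parameters. Keeping compute and memory close together on a single wafer-scale chip reduces the memory and data-movement bottlenecks that arise when a model is split across many smaller devices.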

- Reduced Latency: ASICs often deliver lower latency for inferencing tasks, making them suitable for real-time AI applications.

- Space Efficiency: By integrating purpose-built chips into compact designs, ASICs can save valuable data center space while delivering exceptional performance.

Challenges

- Limited Flexibility: ASICs are designed for specific workloads, which limits their versatility. Organizations with varied AI needs may find them less useful than GPUs.

- Development Time and Cost: Developing an ASIC is a complex and costly process, requiring significant upfront investment. Because its logic is fixed at design time, an ASIC is also less adaptable to evolving AI frameworks and algorithms.

- Ecosystem Maturity: Unlike NVIDIA GPUs, ASICs often lack a mature software ecosystem, developer tools, and community support. This can increase the time to implement and optimize solutions.

- Scalability Constraints: While ASICs are powerful for specific tasks, scaling their deployment across diverse workloads can be challenging.

Choosing the Right Hardware

The choice between GPUs and ASICs largely depends on the use case and operational priorities:

- Versatility and Broad Use Cases: Organizations with a wide range of AI workloads may find GPUs more advantageous due to their flexibility and ecosystem.

- Niche Performance: For organizations focusing on specific tasks like large-scale model training or real-time inferencing, ASIC-based systems like those from Cerebras can deliver performance that general-purpose hardware struggles to match.

- Budget and Scalability: GPUs may be more cost-effective for startups or organizations that need scalability without high upfront investments. In contrast, enterprises with the capital to invest in purpose-built ASICs can realize efficiency gains over time.

- Energy Efficiency: For organizations prioritizing energy efficiency and sustainability, ASICs can provide a clear advantage on the workloads they are designed for.
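
To make the budget trade-off above concrete, here is a minimal Python sketch of a break-even calculation between two options: one with a lower upfront cost but higher running costs, and one with a higher upfront cost but lower running costs. Every figure in it is hypothetical and chosen only to keep the arithmetic easy to follow; none of it is a measured price or power figure for any NVIDIA or Cerebras product. The names `HardwareOption`, `total_cost`, and `break_even_hours` are made up for this illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class HardwareOption:
    name: str
    upfront_usd: float           # purchase and installation (hypothetical)
    hourly_operating_usd: float  # energy, cooling, hosting per hour (hypothetical)


def total_cost(option: HardwareOption, hours: float) -> float:
    """Upfront cost plus operating cost after `hours` hours of use."""
    return option.upfront_usd + option.hourly_operating_usd * hours


def break_even_hours(a: HardwareOption, b: HardwareOption) -> Optional[float]:
    """Hours of operation at which both options have cost the same.

    Returns None when the two cost lines never cross at a positive time,
    meaning one option is never more expensive than the other.
    """
    upfront_gap = b.upfront_usd - a.upfront_usd
    hourly_gap = a.hourly_operating_usd - b.hourly_operating_usd
    if hourly_gap == 0:
        return None
    hours = upfront_gap / hourly_gap
    return hours if hours > 0 else None


# Hypothetical figures for two setups sized to the same workload.
gpu_cluster = HardwareOption("GPU cluster", upfront_usd=240_000, hourly_operating_usd=8.0)
asic_system = HardwareOption("ASIC system", upfront_usd=300_000, hourly_operating_usd=5.0)

hours = break_even_hours(gpu_cluster, asic_system)
if hours is None:
    print("No break-even point: one option is cheaper throughout.")
else:
    print(f"Break-even after {hours:,.0f} hours (about {hours / 8760:.1f} years)")
```

With these made-up inputs, the pricier system only pays for itself after a couple of years of continuous use, so the outcome depends heavily on utilization and on how long the hardware stays in service. Swapping in your own quotes and measured operating costs is the whole point of the exercise.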

The Future of AI Hardware

As AI models grow in complexity and scale, the hardware landscape will likely continue to diversify. GPUs will remain dominant in general-purpose computing and prototyping, while ASICs will carve out a niche in high-performance, specialized applications. Innovations in both fields will drive advancements, ensuring that organizations have the tools they need to stay competitive in the AI-driven future.

Ultimately, the decision comes down to aligning hardware capabilities with the specific needs of the AI workload, budget, and long-term strategic goals. By carefully weighing the pros and cons of GPUs and ASICs, organizations can optimize their AI infrastructure for success.

Next Steps

Are you ready to future-proof your AI infrastructure? Whether you’re scaling with GPUs or investing in cutting-edge ASICs, having the right strategy is key to staying competitive. Contact Colossal today to discuss how we can help you choose and implement the optimal hardware solutions tailored to your unique AI needs.
